I came across the book Neural Networks and Deep Learning, by Michael Nielsen, and decided to invest some time in reading it. I am hoping to reinforce what I have read thus far (for instance, in the book AI Crash Course by Hadelin de Ponteves).

The first chapter in this book covers recognition of hand-written digits. This is a pretty cool use case for AI technology, given that everyone's individual handwriting constitutes a font in and of itself, and even then, there is a certain degree of variation every time an individual hand-writes characters. Who uses technology like this? Well, banks for example might use something like this as part of check processing!

I like the fact that this example is optical in nature, and it could even be inverted, Generative AI style, to generate new fonts or new characters for some context or use case!

One of the first things this book covers is Perceptrons. It explains that a Perceptron's output is essentially binary (a 1 or a 0), the result of a step activation function. Nielsen then moves on to explain that binary results are not always in demand or optimal, and that graded values (shades of gray) between 0 and 1 are often desired or necessary, so that a small change in a weight or bias produces a small change in the output. And this is why we have Sigmoid Neurons.
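To make the difference concrete for myself, here is a minimal sketch of the two neuron types side by side. The weights, bias, and inputs are illustrative values I made up, not numbers from the book, and the function names are my own.

```python
import math

def perceptron(inputs, weights, bias):
    """Step activation: the output is exactly 0 or 1."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0

def sigmoid_neuron(inputs, weights, bias):
    """Sigmoid activation: the output varies smoothly between 0 and 1."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Illustrative (made-up) weights and bias
weights, bias = [2.0, -1.0], -0.5

for inputs in ([0.0, 0.0], [0.3, 0.0], [1.0, 0.0]):
    print(inputs,
          perceptron(inputs, weights, bias),
          round(sigmoid_neuron(inputs, weights, bias), 3))
```

The perceptron flips abruptly from 0 to 1 as the first input grows, while the sigmoid neuron's output rises gradually, which is what makes small, incremental learning adjustments possible.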

This was insightful, because the first book I read (unless I overlooked it) never even mentioned the concept of Perceptrons, and jumped right into Sigmoid Neurons.

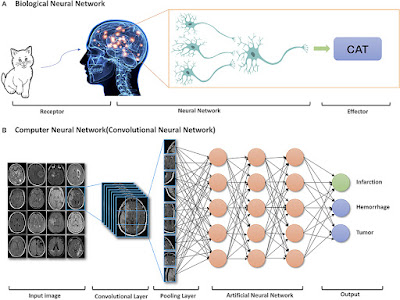

Nielsen then goes on to explain, briefly, the architecture of Neural Networks and embarks on a practical example using the Handwritten Digits Recognition use case.

One thing that was helpful in this chapter was that he explains how the hidden layers of a neural network "fit together" by way of a practical example. This helps as far as design and modeling of a Neural Network go.

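He goes on to discuss the purpose of using a bias along with weights, and then goes into a deeper discussion of gradient descent and stochastic gradient descent. Stochastic Gradient Descent is critical for training the network (supervised learning, in this example) because it is how the cost function gets minimized: the weights and biases are nudged, one mini-batch at a time, in the direction that lowers the cost. Minimizing a cost function does no good, though, if you don't know which way to nudge each weight and bias, so the concept of back propagation is required: it computes the gradient of the cost for every weight and bias, and that is how the minimization gets instilled back into the model.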
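Here is a small sketch of mini-batch stochastic gradient descent that helped me picture the idea. It is not Nielsen's code: instead of a neural network, it fits a straight line y = w*x + b to toy data, so the gradients can be written by hand and no back propagation is needed. The function name, the toy data, and the parameter values are all my own assumptions.

```python
import random

def sgd(data, epochs, mini_batch_size, eta, seed=0):
    """Fit y = w*x + b by mini-batch SGD on the squared-error cost."""
    rng = random.Random(seed)
    data = list(data)          # copy so the caller's list is not shuffled
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)      # new random mini-batches every epoch
        for k in range(0, len(data), mini_batch_size):
            batch = data[k:k + mini_batch_size]
            # Average gradient of 0.5 * (w*x + b - y)**2 over the mini-batch
            grad_w = sum((w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum((w * x + b - y) for x, y in batch) / len(batch)
            # Step against the gradient, scaled by the learning rate eta
            w -= eta * grad_w
            b -= eta * grad_b
    return w, b

# Toy data lying exactly on the line y = 2x + 1 (made up for illustration)
data = [(i / 10.0, 2.0 * (i / 10.0) + 1.0) for i in range(20)]
w, b = sgd(data, epochs=200, mini_batch_size=5, eta=0.1)
print(round(w, 2), round(b, 2))
```

The same three knobs show up here as in the book's example: the number of epochs, the mini-batch size, and the learning rate (eta).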

I downloaded the code for his Handwriting Recognition example. I immediately figured out by reading the README.txt file (most people ignore this and waste time learning the harder way) that the code was not written for Python 3. Fortunately, someone else had ported the code to Python 3, and this was in another GitHub repository.

In running the code from the Python3 repository, I immediately ran into an error with a dependent library called Theano. This error was listed here: Fix for No Section Blas error

So I was fortunate. This fix worked for me. And we got a successful run! In this example, the accuracy in recognizing handwritten digits was an astounding 99.1%!

This code uses a data sample from MNIST, comprising 60K samples. The code by default breaks this into a 50K/10K split of training and validation samples.

So now that we know that we can run the code successfully, what might we want to do next on this code, in this chapter, before moving on to the next chapter?

- Experiment with the number of Epochs

- Experiment with the Learning Rate

- Experiment with the Mini Batch Size

- Experiment with the number of hidden neurons
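To keep these experiments organized, one option is to enumerate every combination up front. The sketch below only builds the list of configurations; it does not call the book's code. The epoch and mini-batch values are the ones I actually tried, while the learning rates and hidden-neuron counts are placeholder assumptions.

```python
from itertools import product

# Epochs and mini-batch sizes come from the runs in this post;
# the learning rates and hidden-neuron counts are illustrative assumptions.
grid = {
    "epochs": [30, 40],
    "mini_batch_size": [10, 20],
    "learning_rate": [0.5, 3.0],
    "hidden_neurons": [30, 100],
}

def configurations(grid):
    """Yield one dict per combination of hyperparameter values."""
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        yield dict(zip(names, values))

configs = list(configurations(grid))
print(len(configs), "configurations; first:", configs[0])
```

Each dictionary could then be passed to whatever training script is being used, changing one setting at a time so the effect of each is easier to see.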

Here is a table that shows some results from playing with the epochs and mini batch sizes:

test.py

| Run | Epochs | Mini Batch Size | Peak Result | Peak Epoch |
|-----|--------|-----------------|-------------|------------|
| 1   | 30     | 10              | 8700/10,000 | 26         |
| 2   | 40     | 10              | 9497/10,000 | 36         |
| 3   | 40     | 20              | 9496/10,000 | 36         |

So interestingly, the numbers go up each epoch until a peak is reached, and then the number settles back down for the last few epochs in these trial runs. It appears, at least from these three runs, that the number of epochs is a more important hyperparameter than the mini batch size.

Another test with adjusted learning rates could be interesting to run, as the learning rate impacts Gradient Descent quite a bit. Gradient Descent is a Calculus-based technique for finding a minimum: when the learning rate is too low, the "ball" takes a long time to drop to the bottom of the low point, and when it is too high, the ball can overshoot the bottom and never settle there.
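A toy example shows the effect without needing the MNIST code at all. This sketch runs plain gradient descent on f(x) = x², whose minimum is at x = 0; the function, starting point, and three learning rates are assumptions chosen purely for illustration.

```python
def gradient_descent(eta, steps=20, start=5.0):
    """Minimize f(x) = x**2 (gradient 2*x) and return the final x."""
    x = start
    for _ in range(steps):
        x -= eta * 2.0 * x   # step against the gradient
    return x

# Too low, reasonable, and too high learning rates (illustrative values)
for eta in (0.01, 0.1, 1.1):
    print(eta, gradient_descent(eta))
```

With the smallest rate the ball has barely moved after 20 steps, with the middle rate it is close to the bottom, and with the largest rate every step overshoots by more than the last, so it moves away from the minimum instead of toward it.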

He also, at the very end, mentions the use of more/different algorithms, referencing the Support Vector Machine (SVM).